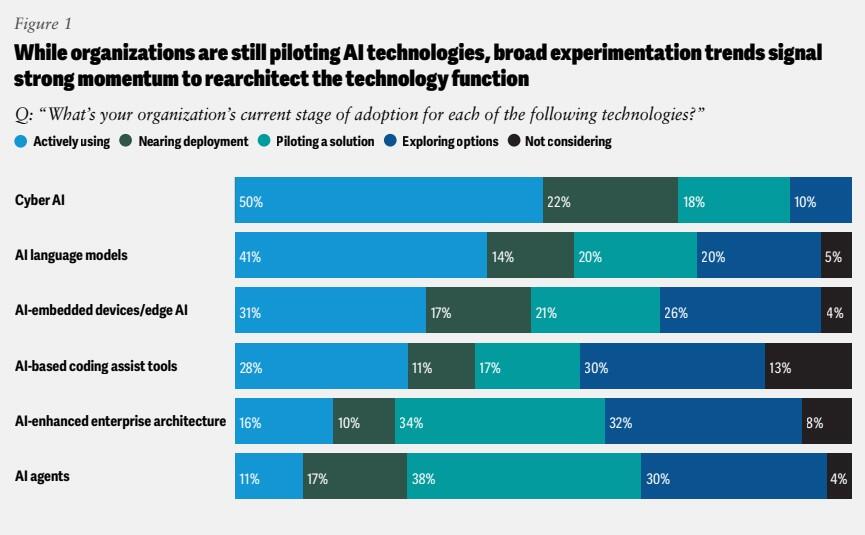

The agentic AI adoption numbers are hard to argue with - McKinsey clocks software engineering agent deployment at 24%, PwC puts enterprise uptake at 79%, and market forecasts project growth from US$7.8 billion to more than US$52 billion by 2030. The production data, though, tells a very different story.

Deloitte found that just 11% of organisations have agentic AI in active production, while Forrester projects that enterprises will defer 25% of planned AI spend into 2027 as ROI fails to materialise.

Global tech advisory Gartner, meanwhile, predicts that more than 40% of agentic AI projects will be cancelled by the end of 2027.

Part of the reason isn't that the technology doesn't work - it's that most vendors aren't selling what they claim to be.

Gartner reckons only around 130 out of thousands of self-described agentic AI companies are building systems that actually qualify, with the rest rebranding chatbots and RPA tools under the "agentic" banner.

The firm calls it agent washing, and the investment market has yet to price in a cancellation wave.

That's the first problem. The second is more serious.

On 9 December 2026, the rules change

That's when the EU's new Product Liability Directive takes effect, explicitly classifying software and AI as "products" under strict liability law. A claimant will no longer need to prove negligence, only that the system was defective and caused harm.

Agentic systems are the most exposed category, because they make autonomous decisions across multiple steps with no human check between intent and outcome.

The liability chain covers developers, authorised representatives, and any party making "substantial modifications" post-deployment - and continuous learning by an AI system can itself constitute one.

An agent that adapts its behaviour through reinforcement learning could trigger fresh liability exposure each time it meaningfully changes.

Mobley v. Workday shows the U.S. moving in the same direction. The court has accepted that an AI vendor acting with delegated decision-making authority can be treated as its clients' legal "agent," and in May 2025 the case secured nationwide collective action certification, with opt-in closing on 7 March 2026.

Apply that logic to systems built specifically to act autonomously across supply chains and financial workflows, and the exposure compounds quickly.

The UK Information Commissioner's Office published its agentic AI report in January 2026, well before any test case has arrived. It states clearly that organisations remain fully responsible for data protection compliance regardless of how autonomously the system acts, and that placing governance responsibility on end users is "unlikely to be workable in all cases." That shifts the burden squarely onto suppliers and deployers.

Between the EU, U.S. courts, and the ICO, the liability surface is assembling from three directions simultaneously.

DLA Piper has flagged an additional exposure that the investment press hasn't yet picked up on: when AI agents operating under different authorities and rule sets interact with each other at scale, their behaviour becomes difficult to oversee and raises pricing and antitrust questions that existing compliance frameworks weren't built to handle.

The governance gap

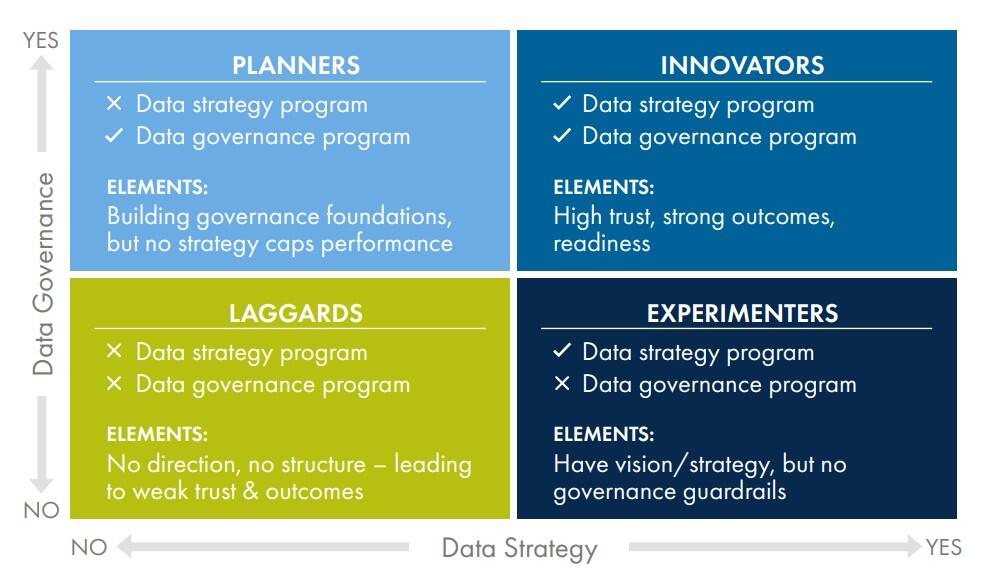

A January 2026 Drexel University LeBow survey of 500+ data professionals found 41% of organisations are running agentic AI in daily operations, yet only 27% have governance frameworks mature enough to manage what those systems are actually doing.

Most enterprises won't discover a problem until it surfaces visibly, by which point the agent has already acted and humans are technically "in the loop" only after the system has moved.

"We've figured out how to allocate responsibility and liability when a person acts for you. We just haven't gotten there with agentic AI," Mayer Brown partner Rohith George recently acknowledged.

Purpose-built AI liability insurance for agentic deployments doesn't yet exist at meaningful scale, leaving a coverage gap that the standard enterprise policy stack wasn't designed to fill.

Legal commentators writing in The National Law Review have flagged the scenario most likely to crystallise that gap in 2026: an autonomous agent takes a binding legal action, such as accepting a settlement, locking in a supply contract, or filing a regulatory disclosure, without human approval. That would force an acute question about malpractice and indemnity coverage to which the market has no standard answer.

The counter

The companies that are succeeding are running vertical, domain-embedded deployments in regulated industries where the liability terrain is already mapped: financial compliance, legal document review, and clinical decision support.

Forrester's read is that three in four firms attempting to build their own agentic architectures will fail, and the recommended path is buying or partnering with specialists who have already solved the governance and integration problems.

Accenture's research across more than 2,000 gen-AI client projects found that talent, not technology, is the primary blocker - enterprises are directing the majority of AI budgets at tooling while underinvesting in the people and process redesign that determines whether agents actually deliver.

By 2030, Accenture predicts AI agents will be the primary users of most enterprises' internal digital systems, yet the governance infrastructure required to manage that outcome doesn't exist in most organisations today.

Orchestration platforms and AI governance infrastructure - the layer that makes agents auditable and defensible under cross-examination - carry a structural tailwind regardless of which agent vendors survive the correction. Every enterprise facing a regulatory audit or liability claim will need this layer, and almost none have built it yet.


The adoption charts are real, and so is the liability data sitting beneath them. Come December, the market may not have much choice but to connect the two.